The goal of this chapter is to answer the following questions:

1. How can we write programs to access text from local files and from the web, in order to get hold of an unlimited range of text?
2. How can we split documents up into individual words and punctuation symbols, so we can carry out the same kinds of analysis we did with text corpora in earlier chapters?
3. How can we write programs to produce formatted output?

In order to address these questions, we will be covering key concepts in NLP, including tokenization and stemming. Along the way you will consolidate your Python knowledge and learn about strings, files, and regular expressions.
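As a rough illustration of the second and third questions (splitting text into words and punctuation symbols, then producing formatted output), here is a minimal sketch using only the Python standard library. The `tokenize` helper and the sample sentence are hypothetical names introduced for this example. The regular expression is a simple stand-in, not NLTK's tokenizer, and it will split contractions such as "don't" into three tokens.

```python
import re
from collections import Counter

def tokenize(text):
    """Split text into word tokens and single punctuation symbols."""
    # \w+ matches a run of letters, digits or underscores;
    # [^\w\s] matches one character that is neither a word character
    # nor whitespace, i.e. a punctuation symbol.
    return re.findall(r"\w+|[^\w\s]", text)

sample = "The cat sat. The cat slept!"  # hypothetical sample text
tokens = tokenize(sample)

# Count lower-cased word tokens, ignoring punctuation.
counts = Counter(t.lower() for t in tokens if t.isalnum())

# Formatted output: left-align the word, right-align its count.
for word, n in counts.most_common():
    print(f"{word:<8}{n:>3}")
```

For real corpora, a dedicated tokenizer is usually a better choice than a hand-written pattern, but the sketch shows the basic shape of the pipeline: raw string in, list of tokens out, then a formatted report.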


In mind, and need to learn how to access them. However, you probably have your own text sources To have existing text collections to explore, such as the corpora we saw import rpus nltk.download('stopwords') from rpus import stopwords stop = stopwords.words('english') data_clean = data_clean.apply(lambda x: ' '.join()) data_clean.The most important source of texts is undoubtedly the Web.

#Nltk clean text code#

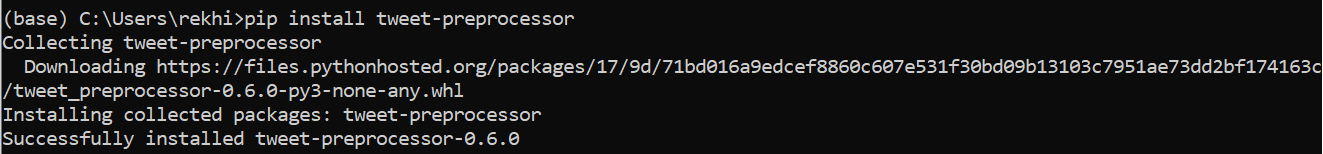

The code below uses this to remove stop words from the tweets.

The Natural Language Toolkit (NLTK) python library has built-in methods for removing stop words. This would be particularly important for use cases such as chatbots or sentiment analysis. For example, if we were building a chatbot and removed the word“ not” from this phrase “ i am not happy” then the reverse meaning may in fact be interpreted by the algorithm. This includes any situation where the meaning of a piece of text may be lost by the removal of a stop word. There are other instances where the removal of stop words is either not advised or needs to be more carefully considered. This is particularly the case for text classification tasks. Stop words are commonly occurring words that for some computational processes provide little information or in some cases introduce unnecessary noise and therefore need to be removed. import re def clean_text(df, text_field, new_text_field_name): df = df.str.lower() df = df.apply(lambda elem: \t])|(\w+:\/\/\S+)|^rt|http.+?", "", elem)) # remove numbers df = df.apply(lambda elem: re.sub(r"\d+", "", elem)) return df data_clean = clean_text(train_data, 'text', 'text_clean') data_clean.head() To keep a track of the changes we are making to the text I have put the clean text into a new column. If we include both upper case and lower case versions of the same words then the computer will see these as different entities, even though they may be the same. We need to, therefore, process the data to remove these elements.Īdditionally, it is also important to apply some attention to the casing of words. All of which are difficult for computers to understand if they are present in the data. Text data contains a lot of noise, this takes the form of special characters such as hashtags, punctuation and numbers. One of the key steps in processing language data is to remove noise so that the machine can more easily detect the patterns in the data.
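The "i am not happy" caveat can be made concrete with a small sketch. It is not the author's code and does not use NLTK: the stop-word set below is a hypothetical, deliberately tiny list chosen for illustration, and `remove_stop_words` and its `keep` parameter are names invented for this example. The idea is simply to subtract a set of negation words from the stop-word list before filtering.

```python
# Hypothetical, deliberately tiny stop-word list for illustration only;
# a real English stop-word list is much longer.
STOP_WORDS = {"i", "am", "is", "the", "a", "not", "no"}

# Words we choose to keep because dropping them can flip the meaning.
NEGATIONS = {"not", "no"}

def remove_stop_words(text, stop_words=STOP_WORDS, keep=frozenset()):
    """Drop stop words from whitespace-separated text, except those in keep."""
    effective = set(stop_words) - set(keep)
    return " ".join(w for w in text.split() if w.lower() not in effective)

naive = remove_stop_words("i am not happy")
careful = remove_stop_words("i am not happy", keep=NEGATIONS)

print(naive)    # happy
print(careful)  # not happy
```

With the naive filter, a sentiment model would only ever see "happy". Keeping the negation words preserves the signal that the sentence is negative, at the cost of a slightly noisier vocabulary.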
